Learning Multiagent Communication with Backpropagation

Welcome back to the series! Previously, we explored Differentiable Inter-Agent Learning (DIAL) and its sibling, Reinforced Inter-Agent Learning (RIAL), which demonstrated how reinforcement-learning (RL) agents can learn a communication protocol from scratch. Yet these methods also revealed some challenges, particularly the difficulty of exploring discrete message spaces (RIAL) and the complexity of a channel that is differentiable only during training (DIAL).

From RIAL/DIAL to CommNet

- RIAL used discrete messages as actions, forcing each agent to explore a large space of possible signals by trial-and-error—no direct backprop could flow between agents.

- DIAL addressed this by making message-passing differentiable during training, while still discretizing messages at test time. This gave end-to-end gradient flow between agents during training, at the cost of an extra component, the discretise/regularise unit (DRU), that switches between the two modes.

- CommNet (“Learning Multiagent Communication with Backpropagation” by Sukhbaatar, Szlam, and Fergus, 2016) leans fully into continuous communication. Instead of discrete message symbols, CommNet uses continuous neural activations to pass information among agents.

Benefits of CommNet:

- Full End-to-End Gradients: The communication channel is just another part of the neural network, so backpropagation easily shapes both how agents send and interpret messages.

- Modular & Scalable: Because CommNet aggregates the hidden states of all agents, you can often add or remove agents without redesigning the entire system.
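The scalability claim can be made concrete with a toy forward pass. The sketch below is a simplified stand-in rather than the authors' code: the function name `forward`, the `tanh` nonlinearity, the layer sizes, and the single communication step are all assumptions chosen for illustration. The point is only that the weight shapes never mention the number of agents, so the same parameters can be applied to teams of different sizes.

```python
import numpy as np

def forward(obs, params):
    """Toy CommNet-style pass (hypothetical helper); works for any agent count J."""
    W_enc, H, C, W_out = params
    h = np.tanh(obs @ W_enc.T)                       # encode each observation
    J = h.shape[0]
    # Mean of the other agents' hidden states (zero if the agent is alone).
    c = (h.sum(axis=0, keepdims=True) - h) / max(J - 1, 1)
    h = np.tanh(h @ H.T + c @ C.T)                   # one communication step
    return h @ W_out.T                               # logits, shape (J, n_actions)

rng = np.random.default_rng(3)
obs_dim, d, n_actions = 6, 8, 4                      # assumed sizes
params = (rng.normal(size=(d, obs_dim)), rng.normal(size=(d, d)),
          rng.normal(size=(d, d)), rng.normal(size=(n_actions, d)))

# The same parameters are reused for teams of 2, 5, and 9 agents.
for J in (2, 5, 9):
    logits = forward(rng.normal(size=(J, obs_dim)), params)
    print(J, logits.shape)
```

Whether a policy trained with one team size actually performs well with another is an empirical question; the sketch only shows that nothing in the architecture prevents it.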

1. Why CommNet?

In many multi-agent RL problems, multiple agents must coordinate on tasks like foraging, navigation, or traffic management. Handcrafting communication protocols can be cumbersome—especially in large, partially observed environments. As the authors put it:

“Many tasks in AI require the collaboration of multiple agents. Typically, the communication protocol between agents is manually specified and not altered during training.”

CommNet discards any notion of a pre-specified protocol. Instead, it learns both what to say and how to use incoming messages—using standard backpropagation.

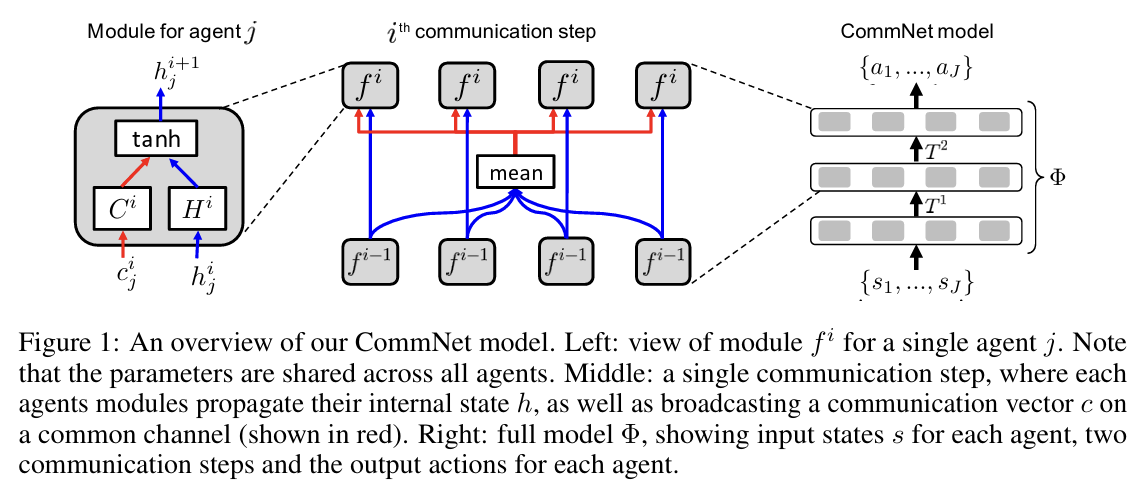

2. Core Idea: A Broadcast Communication Channel

CommNet is essentially a single feed-forward or recurrent network controlling all agents. Each agent is a “module” in that bigger architecture.

Initial Encoding

Each agent $j$ encodes its observation into a hidden vector $h_j^0$.

Communication Step

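Hidden states from the other agents are aggregated (summed or averaged). In the averaged form used in the paper, with $J$ agents and $h_{j'}^i$ denoting the hidden state of agent $j'$ at communication step $i$, agent $j$ then receives:

$$c_j^i = \frac{1}{J-1} \sum_{j' \neq j} h_{j'}^i$$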

This broadcast signal lets each agent learn from everyone else’s current state.
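As a rough illustration of the communication step, here is a minimal NumPy sketch that computes, for every agent, the average of the other agents' hidden states. The function name `communication_vectors` and the example values are made up for this post; the trick of subtracting each agent's own state from the total is just a convenient way to exclude it.

```python
import numpy as np

def communication_vectors(h):
    """Mean of the *other* agents' hidden states, one row per agent.

    h: array of shape (J, d), one hidden vector per agent.
    """
    J = h.shape[0]
    if J < 2:
        # A lone agent has nobody to listen to.
        return np.zeros_like(h)
    total = h.sum(axis=0, keepdims=True)   # (1, d): sum over all agents
    return (total - h) / (J - 1)           # drop own state, then average

h = np.array([[1.0, 0.0],
              [0.0, 2.0],
              [3.0, 4.0]])
c = communication_vectors(h)
print(c)
```

For the first agent, the result is the average of the second and third rows, that is `[1.5, 3.0]`.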

Hidden State Update

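Each agent $j$ combines its old hidden state $h_j^i$ with the communication vector $c_j^i$ to get $h_j^{i+1} = f^i(h_j^i, c_j^i)$, where $f^i$ is a learned module shared by all agents. All of this is differentiable, letting gradients shape the communication content.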
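A single update can be sketched as one linear layer over the agent's own state plus one over the incoming broadcast, followed by a nonlinearity. The weight names `H` and `C`, the choice of `tanh`, and the sizes below are assumptions for illustration, not details taken from this post.

```python
import numpy as np

def comm_step(h, c, H, C):
    """One CommNet-style update per agent: tanh(H @ h_j + C @ c_j).

    h, c: arrays of shape (J, d); H, C: weight matrices of shape (d, d)
    shared by every agent.
    """
    return np.tanh(h @ H.T + c @ C.T)

rng = np.random.default_rng(0)
J, d = 3, 4                                          # assumed sizes
h = rng.normal(size=(J, d))
c = (h.sum(axis=0, keepdims=True) - h) / (J - 1)     # mean of the others
H = rng.normal(scale=0.5, size=(d, d))
C = rng.normal(scale=0.5, size=(d, d))

h_next = comm_step(h, c, H, C)
print(h_next.shape)
```

Because every operation here is a matrix product or an elementwise function, an autodiff framework can backpropagate from any agent's output into every other agent's earlier hidden state.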

Repeat

Multiple “hops” of communication can be stacked.
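Stacking hops amounts to a loop over communication layers. The sketch below assumes a separate `(H, C)` weight pair per hop and a `tanh` nonlinearity; sharing one pair across hops would give a recurrent variant. The helper names `mean_of_others` and `run_hops` are hypothetical.

```python
import numpy as np

def mean_of_others(h):
    """Average the hidden states of all agents except the receiver."""
    J = h.shape[0]
    return (h.sum(axis=0, keepdims=True) - h) / max(J - 1, 1)

def run_hops(h0, layers):
    """Apply one (H, C) weight pair per communication hop."""
    h = h0
    for H, C in layers:
        c = mean_of_others(h)             # broadcast from the current states
        h = np.tanh(h @ H.T + c @ C.T)    # update every agent in parallel
    return h

rng = np.random.default_rng(1)
J, d, K = 4, 8, 2                         # agents, hidden size, hops (assumed)
h0 = rng.normal(size=(J, d))
layers = [(rng.normal(scale=0.3, size=(d, d)),
           rng.normal(scale=0.3, size=(d, d))) for _ in range(K)]
hK = run_hops(h0, layers)
print(hK.shape)
```

With more than one hop, an agent can react to what others said in response to its own earlier state, which a single hop cannot express.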

Action Selection

Finally, each agent’s last hidden state is mapped to an action distribution. Training the entire system end to end with RL ensures the broadcast messages evolve to support the team’s shared goal.
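The output stage can be sketched as a shared linear layer followed by a softmax, with each agent sampling its own action. The name `W_out`, the five-action space, and the random hidden states are placeholders for illustration only.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)   # stabilise the exponentials
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def action_distribution(h_final, W_out):
    """Map each agent's final hidden state to action probabilities."""
    return softmax(h_final @ W_out.T)

rng = np.random.default_rng(2)
J, d, n_actions = 3, 4, 5                   # assumed sizes
h_final = rng.normal(size=(J, d))           # stand-in for the last hop's output
W_out = rng.normal(size=(n_actions, d))

probs = action_distribution(h_final, W_out)
# Each agent samples independently from its own distribution.
actions = np.array([rng.choice(n_actions, p=p) for p in probs])
print(probs.sum(axis=1), actions)
```

In an RL setup, the log-probabilities of the sampled actions would feed a policy-gradient loss, and the gradient of that loss would flow back through the communication layers.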

“We propose a model where cooperating agents learn to communicate amongst themselves before taking actions. Each agent is controlled by a deep feed-forward network… Through this channel, they receive the summed transmissions of other agents. However, what each agent transmits on the channel is not specified a-priori, being learned instead.”

3. How Is It Different from RIAL/DIAL?

(a) Fully Continuous Approach

- RIAL treated messages as discrete actions, so no gradients passed between agents.

- DIAL used continuous signals during training but discretized messages at test time.

- CommNet never discretizes messages, simplifying the pipeline.
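The contrast between the last two bullets can be sketched in a few lines. The `dru` function below follows the general idea of DIAL's discretise/regularise unit (a noisy logistic during training, a hard threshold at execution); the noise scale and function names are assumptions, and `commnet_message` is a deliberately trivial stand-in showing that a CommNet-style channel applies no such switch.

```python
import numpy as np

def dru(message_logit, sigma, training, rng):
    """DIAL-style discretise/regularise unit (illustrative sketch).

    Training: noisy logistic, continuous in (0, 1), so gradients can flow.
    Execution: hard threshold, giving a 0/1 message.
    """
    if training:
        noise = rng.normal(scale=sigma, size=np.shape(message_logit))
        return 1.0 / (1.0 + np.exp(-(message_logit + noise)))
    return (np.asarray(message_logit) > 0).astype(float)

def commnet_message(hidden_state):
    """CommNet-style channel: the continuous vector is sent unchanged."""
    return hidden_state

rng = np.random.default_rng(5)
logits = np.array([-1.0, 2.0])
print(dru(logits, sigma=2.0, training=True, rng=rng))    # values in (0, 1)
print(dru(logits, sigma=2.0, training=False, rng=rng))   # hard 0/1 bits
print(commnet_message(logits))                           # same in both modes
```

The two-mode behaviour is what makes DIAL's train and test pipelines differ; a fully continuous channel uses one code path for both.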

(b) Simple & Scalable

- Instead of separate modules for environment actions and communication (as in RIAL/DIAL), CommNet merges everything into one large network.

- Agents are identical “blocks” that each receive the aggregated hidden states of the others, making it easy to scale.
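One consequence of identical blocks with a symmetric aggregate is that reordering the agents simply reorders the outputs. The check below is a small sanity test under assumed weights and sizes, with a hypothetical `comm_layer` helper; it is not a result reported in the paper.

```python
import numpy as np

def comm_layer(h, H, C):
    """Shared-weight communication layer applied to all agents at once."""
    J = h.shape[0]
    c = (h.sum(axis=0, keepdims=True) - h) / max(J - 1, 1)
    return np.tanh(h @ H.T + c @ C.T)

rng = np.random.default_rng(4)
J, d = 5, 3                               # assumed sizes
h = rng.normal(size=(J, d))
H, C = rng.normal(size=(d, d)), rng.normal(size=(d, d))

perm = rng.permutation(J)                 # shuffle the agent order
out = comm_layer(h, H, C)
out_perm = comm_layer(h[perm], H, C)

# Shuffling the inputs shuffles the outputs in the same way.
print(np.allclose(out_perm, out[perm]))
```

This symmetry is why no agent needs a fixed slot in the network: the layer has no notion of agent identity beyond what each hidden state carries.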

(c) Centralized Training, Decentralized Execution

- CommNet, like DIAL, can leverage a “centralized” perspective during training.

- After training, each agent picks actions from its own hidden state plus the broadcast signal, so the communication channel must remain available at execution time.

4. Final Thoughts

CommNet builds directly on the ideas introduced by RIAL and DIAL, pushing learned communication even further. By using fully continuous signals, CommNet avoids the discrete messaging bottleneck and achieves smoother gradient flow across agents. While every multi-agent method still faces issues like noise, partial observability, and scaling to extremely large groups, CommNet remains a compelling demonstration of how we can let agents simply learn to talk to each other—no hardcoded language needed.

Disclaimer: The quotes and references above are adapted from “Learning Multiagent Communication with Backpropagation”.