PaLM-E: A Frontier in Embodied AI and Multimodal Learning

Introduction

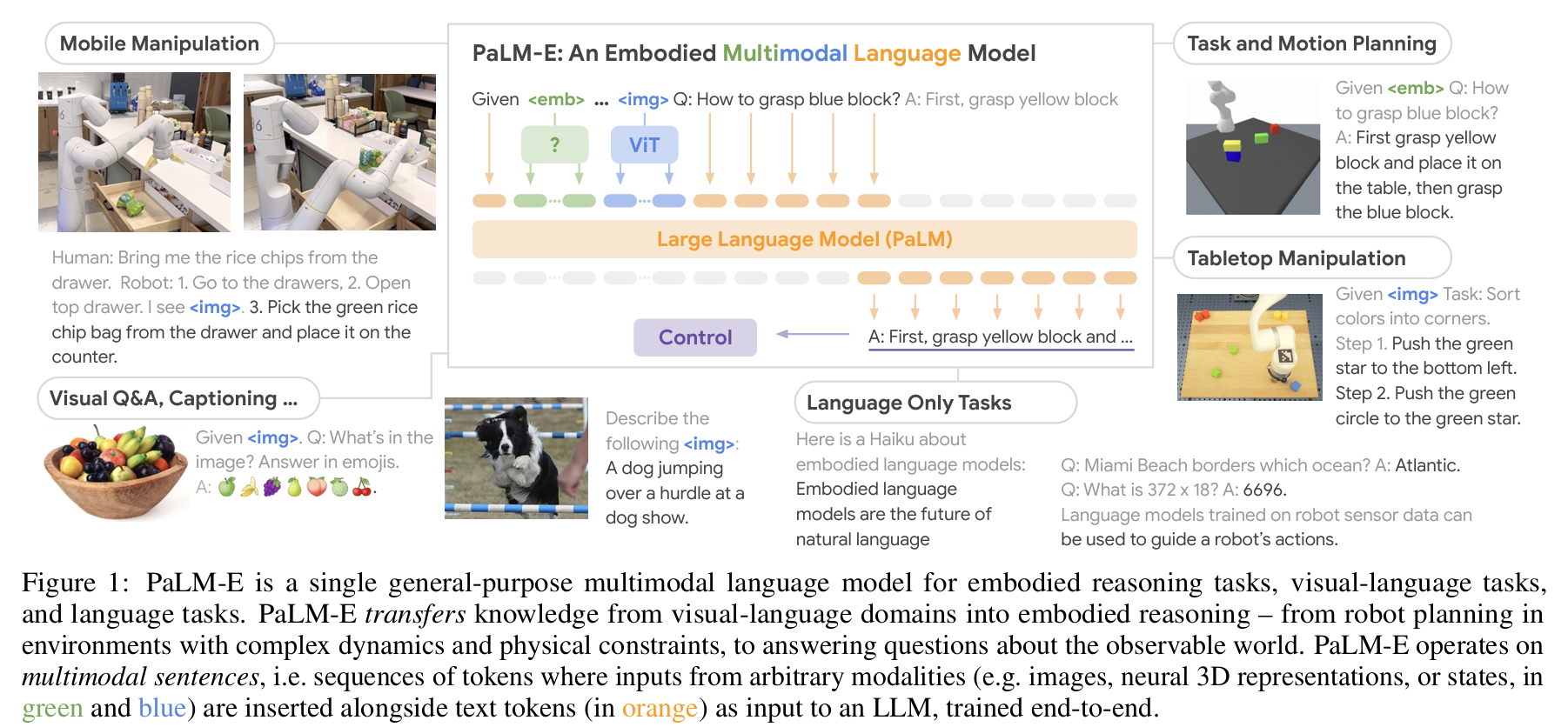

The rapid advancements in large language models (LLMs) have transformed how artificial intelligence interacts with humans. However, real-world applications, such as robotics and embodied AI, require reasoning beyond language processing—integrating perception, action, and decision-making. While LLMs like GPT-4 and PaLM have demonstrated strong capabilities in understanding and generating text, they lack grounding in real-world sensory inputs like images, 3D spatial representations, and continuous sensor data from robotic systems.

PaLM-E, introduced by Driess et al. (2023), tackles this challenge by embedding multimodal sensor data directly into the language model’s token space, effectively linking visual perception, robotics, and natural language reasoning. This approach allows the model to execute long-horizon planning, answer visual queries, and even adapt to dynamic environments, demonstrating remarkable zero-shot generalization in complex real-world scenarios.

Understanding PaLM-E

Imagine you’re at a super fancy dinner party (because AI deserves fine dining too). At the table, you have three guests:

- A brilliant novelist (LLM component) – Knows everything about literature and can talk about any topic but has never actually touched anything.

- A world-class photographer (Vision Transformer, ViT) – Can see and describe everything on the table in great detail but doesn’t know how to interact with it.

- A seasoned chef (Robotic Controller & Sensor Encoder) – Can chop, stir, and cook, but only if someone tells them exactly what to do.

Now, typically, these three don’t work well together. The novelist can describe what a perfect steak looks like but can’t cook it. The photographer can see the steak but doesn’t know what ingredients to use. The chef can cook but doesn’t know what’s on the table.

PaLM-E is like an exceptional party host that gets these three to collaborate seamlessly. By embedding visual information, sensor data, and language processing into a unified system, PaLM-E ensures that the chef can take instructions based on both textual descriptions and real-world observations, the novelist can generate recipes using sensory data, and the photographer can adjust their framing based on physical constraints in the environment.

Key Contributions

The authors introduce PaLM-E as an embodied multimodal language model that unifies textual, visual, and sensor-based inputs. Unlike previous models that relied on modular pipelines (e.g., SayCan, which connects LLMs with robotic policies via affordance functions), PaLM-E is trained end-to-end, allowing it to:

- Ingest continuous sensory inputs (images, neural 3D representations, and state estimations) alongside text tokens.

- Leverage transfer learning from large-scale vision-language datasets to improve robotic reasoning tasks.

- Achieve state-of-the-art results in Visual Question Answering (VQA) and robotic planning tasks, surpassing models designed specifically for either domain.

Mathematical Formulation: A Unified Multimodal Model

Transformer-based Autoregressive Modeling

PaLM-E extends the autoregressive formulation of large language models. Given an input sequence of text tokens , images , and sensor data , the model learns the conditional probability:

where and are encoded into the same token space as text inputs. This allows seamless integration of perceptual and linguistic data within the Transformer’s attention layers.

Vision and Scene Representation Encoders

PaLM-E incorporates two key encoders to process visual and spatial information:

- Vision Transformer (ViT): Converts images into structured embeddings, capturing global object relationships.

- Object Scene Representation Transformer (OSRT): Models 3D spatial relationships, allowing robots to infer object positioning and geometric constraints.

Formally, given an image , the ViT encoder computes:

where is the embedded representation. Similarly, OSRT maps a set of observed images into object-centric slots :

These representations are then projected into the language model’s token space, allowing language-driven spatial reasoning.

Training Objective

PaLM-E is optimized via cross-entropy loss over predicted text sequences:

where is the length of the multimodal prefix. This enables the model to generate robotic commands, captions, or natural language explanations based on multimodal inputs.

Experimental Setup and Results

The authors conduct extensive evaluations in robotic planning, object manipulation, and vision-language tasks. Key benchmarks include:

Task and Motion Planning (TAMP)

PaLM-E is tested on structured block manipulation tasks, where it must plan multi-step actions to achieve a goal (e.g., sorting objects by color). Results indicate that PaLM-E significantly outperforms SayCan, particularly in long-horizon reasoning.

Example Task: Sorting by Color

Given an image of a table with scattered blocks, the model executes:

- Identify color groups using ViT embeddings.

- Generate a sequence of push actions to cluster objects correctly.

- Replan dynamically if objects move unexpectedly.

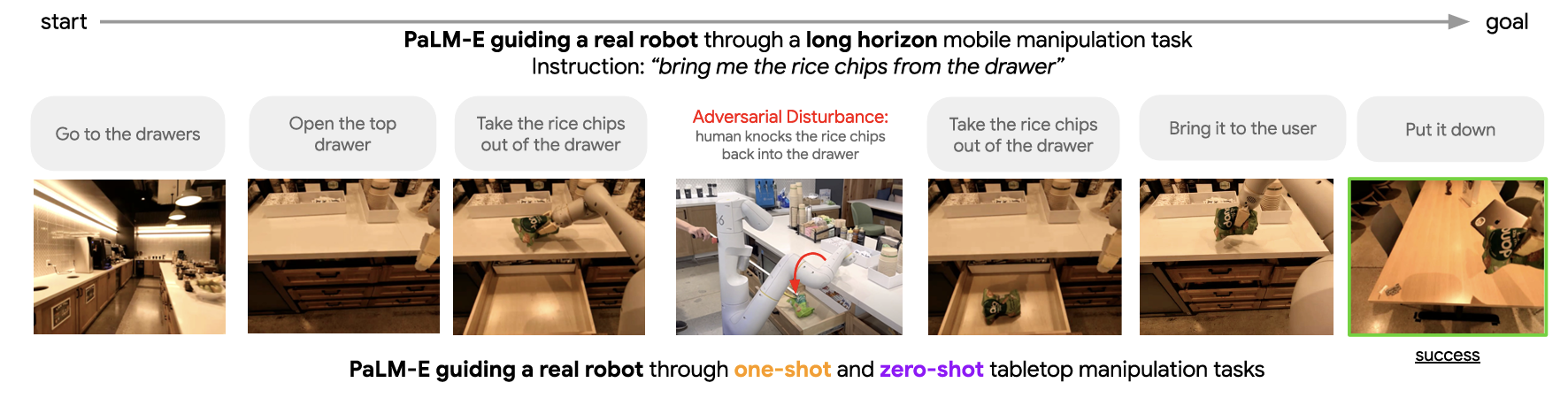

Mobile Manipulation in a Kitchen

In this setting, PaLM-E controls a robot to perform household tasks such as retrieving objects from drawers. The model demonstrates real-time reasoning:

- Detecting object occlusions.

- Adjusting grip strategies based on visual feedback.

- Replanning when failures occur (e.g., dropped objects).

From the paper: “PaLM-E successfully adapts to unexpected failures, leveraging its multimodal reasoning to dynamically update action sequences.”

Zero-Shot Visual Question Answering (VQA)

PaLM-E is evaluated on OK-VQA and COCO captioning benchmarks, where it surpasses task-specific models without additional finetuning. For instance:

- Given an image of fruit in a basket, the model answers: 🍏🍌🍇🍐🍑🍈🍒

- Given an image of a basketball game, it infers: “The player on the left is Kobe Bryant, who won five championship rings.”

This highlights its ability to connect textual and visual reasoning in zero-shot settings.

Discussion and Future Directions

Why Does PaLM-E Matter?

PaLM-E marks a significant step towards generalist AI systems that can seamlessly integrate vision, language, and real-world interaction. Unlike modular approaches that separately handle perception and action, PaLM-E achieves end-to-end multimodal learning, demonstrating:

- Stronger zero-shot generalization in unseen environments.

- Efficient transfer learning from vision-language tasks to robotic control.

- Improved task performance by jointly optimizing across modalities.

Challenges and Open Questions

Despite its success, several challenges remain:

- Real-world deployment: While PaLM-E shows strong simulation results, scalability to diverse real-world settings requires further testing.

- Long-term planning: The model still struggles with multi-step reasoning in highly dynamic environments.

- Computational efficiency: Training and inference on PaLM-E-562B (562 billion parameters) is resource-intensive, raising concerns about scalability and accessibility.

Future Research Directions

- Integrating reinforcement learning: Combining PaLM-E with reinforcement learning (RL) policies could improve adaptation in changing environments.

- Exploring hierarchical memory architectures: Adding memory mechanisms to retain long-term dependencies could enhance planning.

- Fine-tuning for domain-specific applications: Adapting PaLM-E for healthcare robotics, autonomous vehicles, and industrial automation presents exciting possibilities.

Mobile Manipulation

One of the strengths of PaLM-E is its ability to act as a high-level policy generator for robotic tasks. While it can reason over long-horizon planning, multimodal perception, and natural language instructions, the actual execution of low-level actions—such as grasping, navigation, and fine motor control—is handled by a separate policy. In principle, PaLM-E can be paired with any low-level controller, but in this work, the authors use RT-1 (Brohan et al., 2022) as an example.

This separation follows a hierarchical control paradigm:

- PaLM-E provides high-level task decomposition: It generates a structured sequence of actions to complete a task.

- A low-level controller (such as RT-1) executes fine-grained motor actions: It translates high-level instructions into robot-specific commands.

This modular approach means PaLM-E is not limited to RT-1—it can be used with other reinforcement learning (RL)-based policies, classical control models, or even imitation learning approaches, depending on the deployment context.

Example Scenario: Cleaning a Spill

Consider a real-world interaction where a robot assistant is asked:

“I spilled my drink, can you bring me something to clean it up?”

To complete this task, the robot must:

- Understand the instruction and generate an action plan.

- Search for and locate a cleaning tool (e.g., a sponge or napkin).

- Pick up the object and transport it to the user.

- Place the object within reach for the user to clean up.

Step 1: High-Level Planning with PaLM-E

PaLM-E takes the natural language input and generates a structured sequence of high-level actions:

- Find a sponge or napkin.

- Pick it up.

- Navigate to the user.

- Deliver the item.

Each of these high-level actions is expressed as a language instruction that can be fed to a lower-level controller.

Step 2: Low-Level Execution Using RT-1

Since the execution of each action requires fine-grained motor control, PaLM-E hands over control to RT-1, a transformer model trained for robotic manipulation. Given RGB camera input and the high-level instruction (e.g., “Pick up the sponge”), RT-1 computes the appropriate end-effector commands to grasp the object.

For example:

- Navigation to the sponge: The robot moves toward the location identified by PaLM-E.

- Object grasping: RT-1 uses visual attention to detect and pick up the object.

- Navigation to the user: The robot follows a trajectory generated by PaLM-E.

- Object placement: RT-1 controls the final release motion to place the sponge within reach.

Why This Modular Approach Works

The key advantage of PaLM-E acting as a high-level planner is that it allows flexibility in selecting the best low-level control policy for the task at hand. While RT-1 is used in this work, other controllers could be used instead, such as:

- Reinforcement Learning (RL) Policies: For tasks requiring adaptive learning in dynamic environments.

- Imitation Learning Models: Where robots learn from human demonstrations.

- Classical Motion Planning Algorithms: Such as RRT* or A* for structured navigation tasks.

This flexibility makes PaLM-E highly generalizable, allowing it to integrate with different robotic systems depending on their specific needs.

Final Thoughts: The Power of Combining High-Level and Low-Level Policies

By allowing PaLM-E to focus on multimodal reasoning and planning, and leaving the execution of motor actions to a separate controller, this approach achieves both flexibility and robustness. The hierarchical nature of this framework means that PaLM-E can be integrated with different robotic platforms, control architectures, and sensor configurations, making it a scalable and adaptable solution for embodied AI.

Conclusion

PaLM-E represents a paradigm shift in embodied AI, bridging the gap between language models, visual understanding, and robotic control. By embedding sensor data into LLM token spaces, it enables end-to-end multimodal learning, demonstrating strong generalization across tasks. While challenges remain, PaLM-E lays the groundwork for more capable and adaptable AI systems, moving us closer to truly general-purpose intelligent agents.

Disclaimer: The quotes and references above are adapted from “PaLM-E: An Embodied Multimodal Language Model”.